At a time when it is getting harder to know whom to trust online, social media plays a big role in determining whether you are in a safe space. Platforms like Facebook and Instagram have an important responsibility to keep their users safe.

Yesterday, Meta shared on its blog how it protects its teen users.

“Today, we’re sharing an update on how we protect young people from harm and seek to create safe, age-appropriate experiences for teens on Facebook and Instagram.”

Updates to Limiting Interactions You Don’t Want

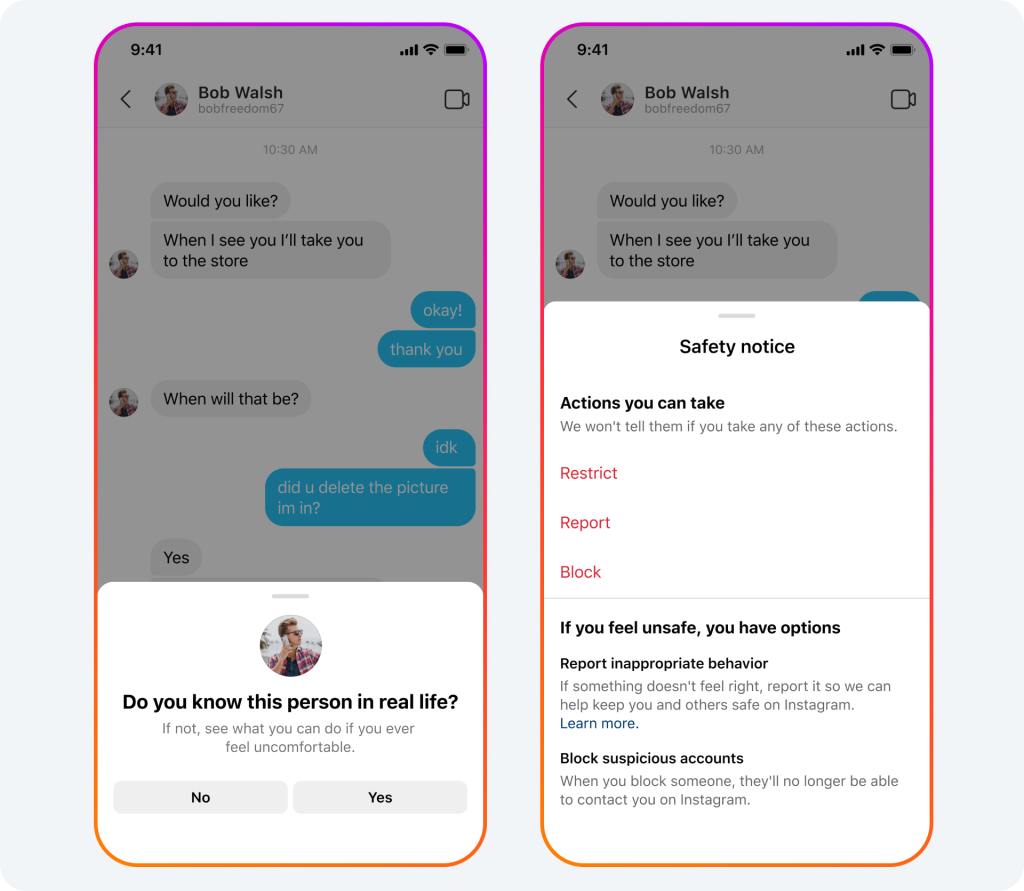

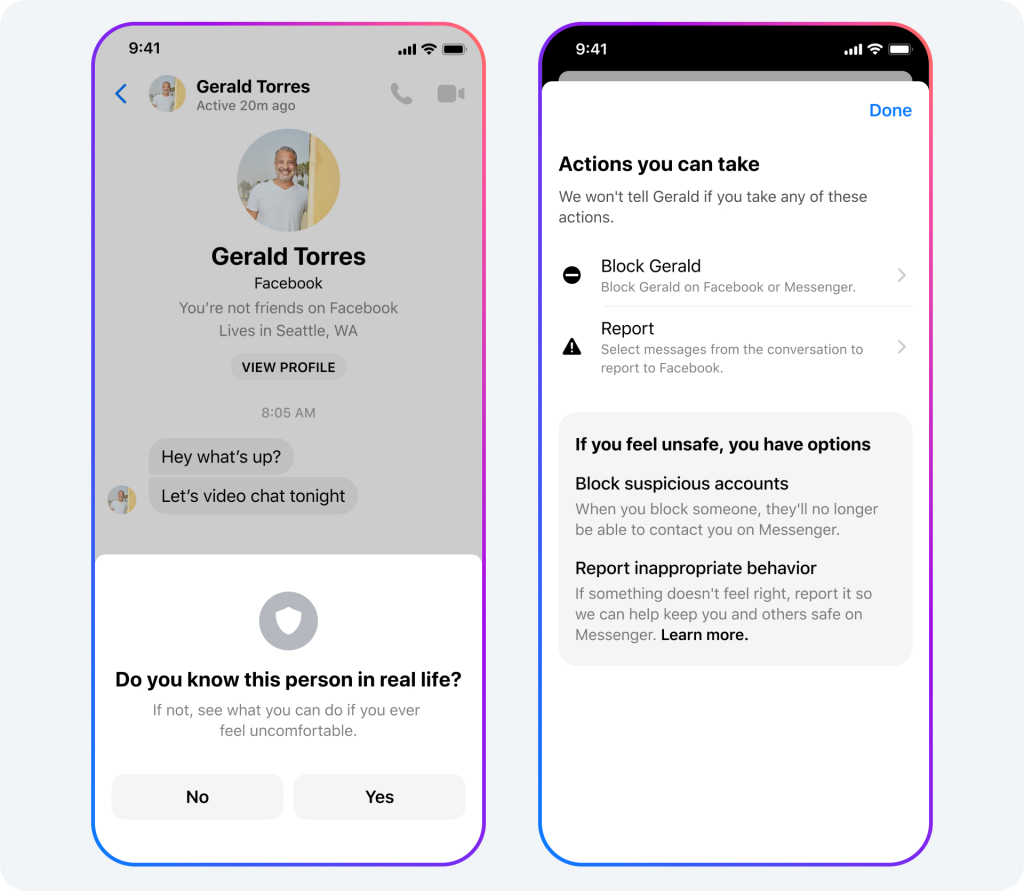

Last year, Meta described some of the measures it takes to keep teens from interacting with potentially suspicious adults. For instance, it restricts adults from messaging teens they are not connected to and from seeing teens in their People You May Know recommendations. In addition to these existing safeguards, Meta is now testing ways to keep teens from messaging suspicious adults they are not connected to, and it won’t show those adults in teens’ People You May Know recommendations.

A “suspicious” account is, for instance, one belonging to an adult who has recently been blocked or reported by a young person. As an additional layer of protection, Meta is also testing the removal of the message button from teens’ Instagram accounts when those accounts are viewed by suspicious adults.

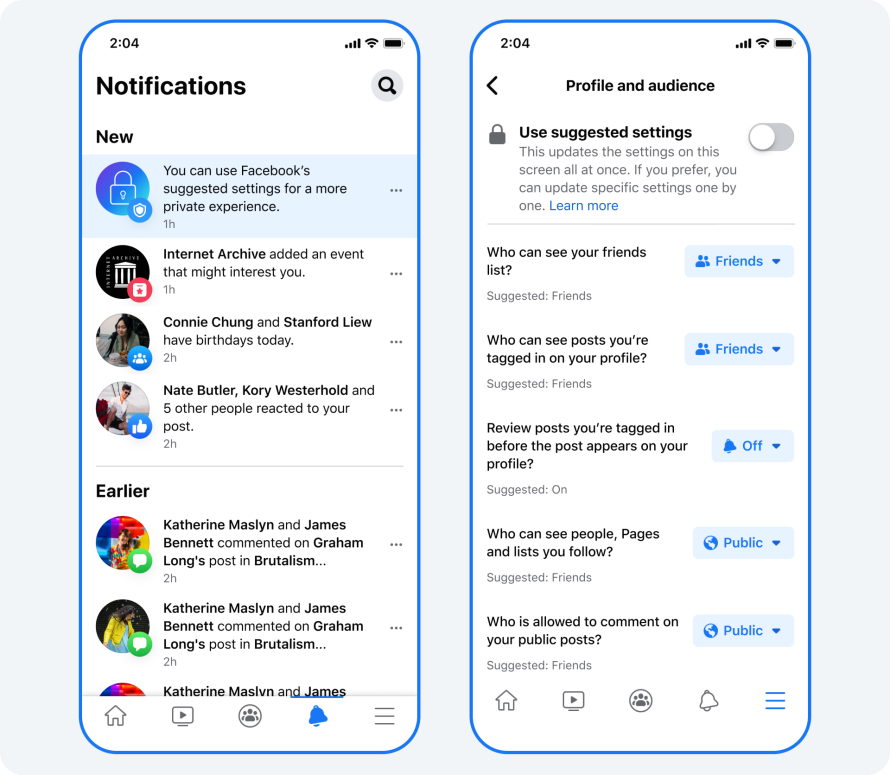

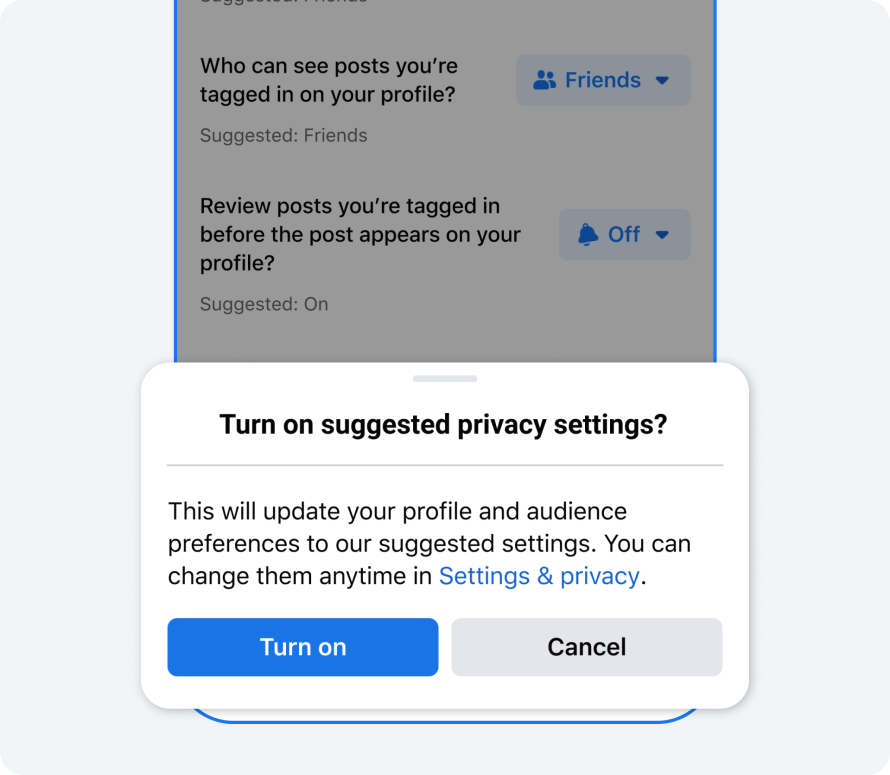

New Privacy Defaults for Teens on Facebook

Meta also shared the new default privacy settings for teens on Facebook.

“Starting today, everyone who is under the age of 16 (or under 18 in certain countries) will default into more private settings when they join Facebook, and we’ll encourage teens already on the app to choose these more private settings for:

- Who can see their friends list

- Who can see the people, Pages and lists they follow

- Who can see posts they’re tagged in on their profile

- Reviewing posts they’re tagged in before the post appears on their profile

- Who is allowed to comment on their public posts”

According to Meta, the move follows the rollout of similar privacy defaults for teens on Instagram and aligns with its safety-by-design and ‘Best Interests of the Child’ framework.

New Methods to Stop Teens’ Intimate Images from Spreading

Meta also provided an update on its efforts to stop the spread of teens’ intimate images online, especially when such images are used to exploit them, a practice known as “sextortion.” Because the non-consensual sharing of such images can be extremely distressing, the company wants to do everything it can to discourage teens from sharing them on its apps in the first place.

“We’re working with the National Center for Missing and Exploited Children (NCMEC) to build a global platform for teens who are worried intimate images they created might be shared on public online platforms without their consent. This platform will be similar to the work we have done to prevent the non-consensual sharing of intimate images for adults. It will allow us to help prevent a teen’s intimate images from being posted online and can be used by other companies across the tech industry. We’ve been working closely with NCMEC, experts, academics, parents, and victim advocates globally to help develop the platform and ensure it responds to the needs of teens so they can regain control of their content in these horrific situations. We’ll have more to share on this new resource in the coming weeks,” the blog said.

“We found that more than 75% of people that we reported to NCMEC for sharing child exploitative content shared the content out of outrage, poor humor, or disgust, and with no apparent intention of harm. Sharing this content violates our policies, regardless of intent. We’re planning to launch a new PSA campaign that encourages people to stop and think before resharing those images online and to report them to us instead.”

Anyone seeking support and information related to sextortion can visit Meta’s education and awareness resources, including the Stop Sextortion hub on the Facebook Safety Center, developed with Thorn. Meta also offers a guide for parents on how to talk to their teens about intimate images on the Education hub of its Family Center.