Today at Search On, Google discussed how advances in machine learning are bringing it closer to creating search experiences that reflect how people make sense of the world. With a deeper understanding of information in all its forms, from language to images to objects in the physical world, Google says it can open up entirely new ways to help people find and explore information.

Google is making visual search more natural than ever and helping people navigate information more intuitively. Here's a closer look.

Helping you search outside the box

“With Lens, you can search the world around you with your camera or an image. (People now use it to answer more than 8 billion questions every month!) Earlier this year, we made visual search even more natural with the introduction of multisearch, a major milestone in how you can search for information. With multisearch, you can take a picture or use a screenshot and then add text to it — similar to the way you might naturally point at something and ask a question about it. Multisearch is available in English globally, and will be coming to over 70 languages in the next few months,” Google wrote.

They also added, “One of the most powerful aspects of visual understanding is its ability to break down language barriers. With Lens, we’ve already gone beyond translating text to translating pictures. In fact, every month, people use Google to translate text in images over 1 billion times, across more than 100 languages.”

With major advancements in machine learning, Google can now blend translated text into complex images so that the result looks and feels much more natural. It has also optimized its machine learning models to do all this in just 100 milliseconds, shorter than the blink of an eye. The feature uses generative adversarial networks (GAN models), the same technology that helps power Magic Eraser on Pixel. This improved experience is launching later this year.

At its I/O conference, Google previewed how it is supercharging multisearch with “multisearch near me,” which lets you snap a picture or take a screenshot of a dish or an item and then instantly find it nearby. This new way of searching will help you find and connect with local businesses, whether you want to support your neighborhood shop or just need something right now. “Multisearch near me” will start rolling out in English in the U.S. later this fall.

And now, starting with the Google app for iOS, they will be putting some of their most useful tools right at your fingertips. You’ll see shortcuts for shopping your screenshots, translating text with your camera, humming to search, and more directly beneath the search box.

New ways to explore information

As it reimagines how people search for and engage with information, Google also described its aim to let you ask questions with fewer words, or even none at all, and still have Search understand exactly what you mean or surface information you might find useful. You will also be able to explore information presented in a way that makes sense to you, whether that means going deeper on a topic as it unfolds or discovering new perspectives that broaden your view.

A key part of this is finding the results you're looking for quickly. In the coming months, Google will launch an even faster way to find what you need.

“But sometimes you don’t know what angle you want to explore until you see it. So we’re introducing new search experiences to help you more naturally explore topics you care about when you come to Google.”

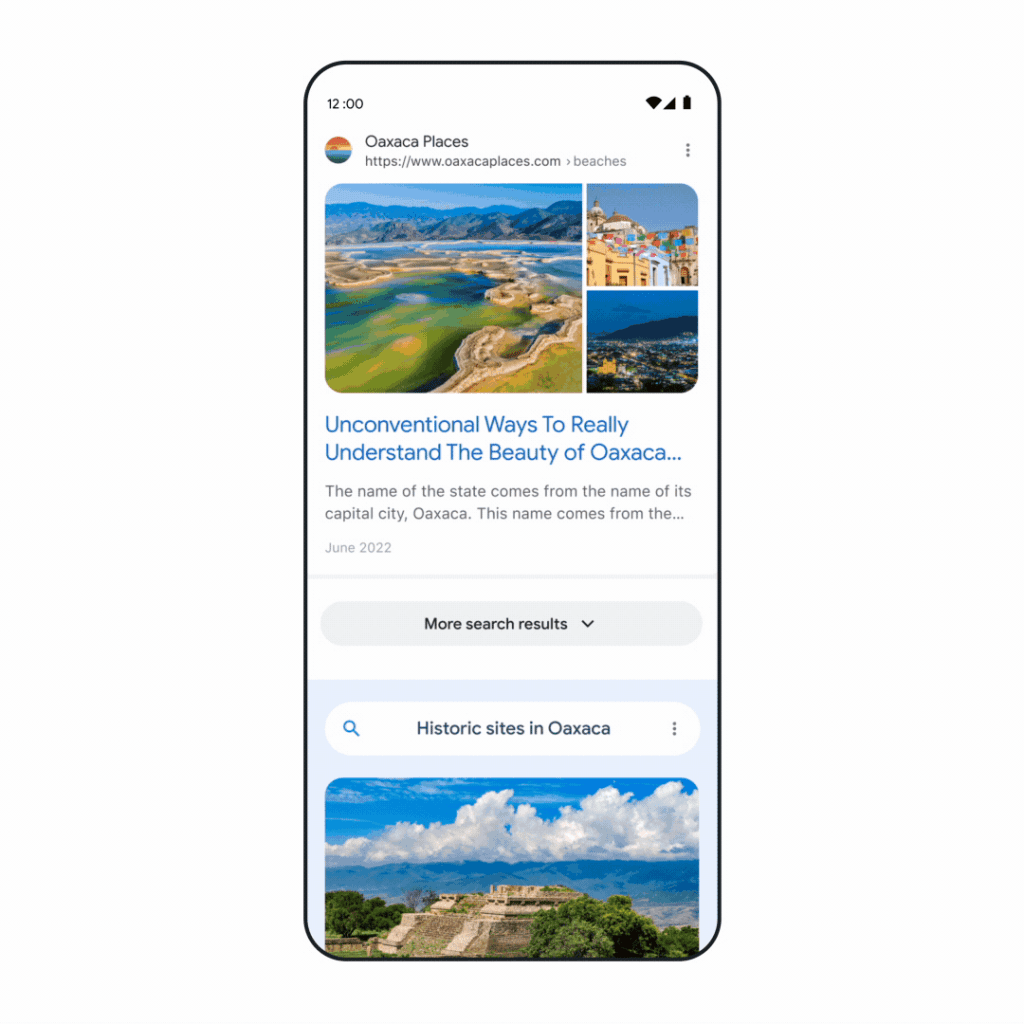

Drawing on its understanding of how people search, Google will soon show you topics that help you go deeper or find a new angle on a subject. You can add or remove topics to zoom in or out. Best of all, this may point you toward ideas you hadn't considered before.

Google is also redesigning how it presents results to better reflect the way people explore topics. Whatever format the information takes (text, images, or video), you'll see the most relevant content from a variety of sources. As you scroll further, you'll also find a new way to get inspired by topics related to your search. For instance, you might never have thought to look up the historic sites in Oaxaca, or to find live music while you're there.

These new ways to explore information will be available in the coming months, to help wherever your curiosity takes you.

Alongside these announcements, Google also shared a video walking through what's new and how these changes are meant to make searching easier for everyone.

“We hope you’re excited to search outside the box, and we look forward to continuing to build the future of search together,” Google wrote on its blog.