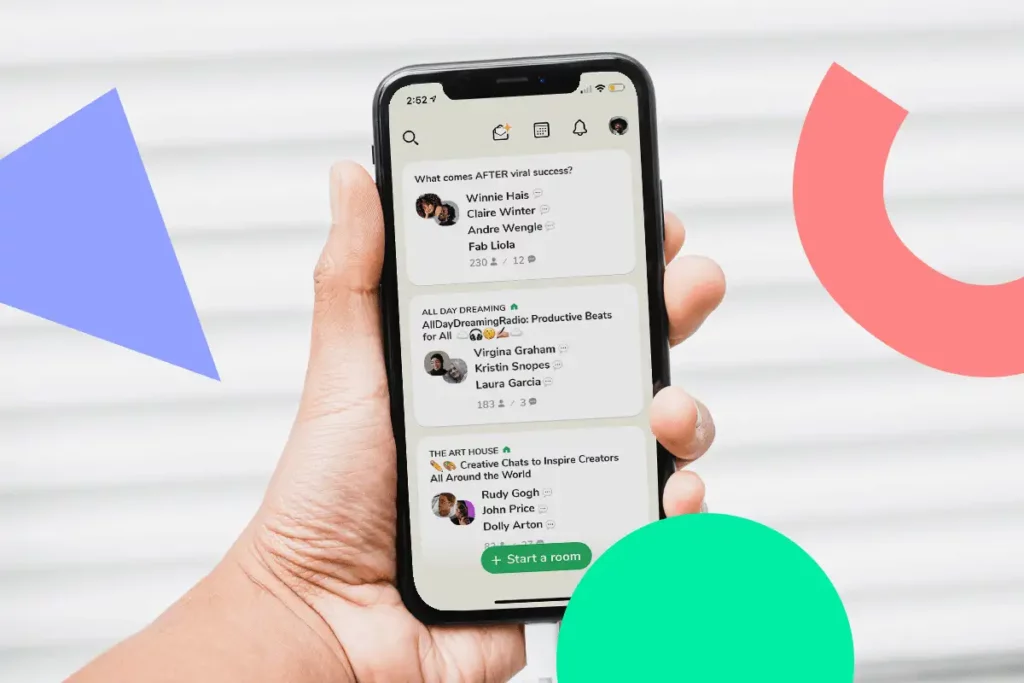

Clubhouse has officially announced a new update on the Clubhouse Blog, titled ‘Making the hallway more relevant with machine learning’.

Previously, when you opened the Clubhouse app, the hallway showed rooms ranked by a simple algorithm based on how many of your friends were in each room or how closely its topic matched your interests.
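For contrast, a hand-tuned ranking of that kind might look something like the heuristic below. The blog only says the old algorithm considered how many friends were in a room and the topics you relate to; the `Room` type, the linear scoring, and the weights (`friend_weight`, `topic_weight`) are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Room:
    room_id: str
    participants: Set[str] = field(default_factory=set)
    topics: Set[str] = field(default_factory=set)


def heuristic_score(room: Room, friends: Set[str], interests: Set[str],
                    friend_weight: float = 1.0, topic_weight: float = 2.0) -> float:
    """Hypothetical hand-tuned score: friends present plus topic overlap."""
    friends_in_room = len(room.participants & friends)
    topic_overlap = len(room.topics & interests)
    return friend_weight * friends_in_room + topic_weight * topic_overlap


def rank_hallway(rooms: List[Room], friends: Set[str], interests: Set[str]) -> List[str]:
    """Return room ids sorted from highest to lowest heuristic score."""
    ranked = sorted(rooms, key=lambda r: heuristic_score(r, friends, interests), reverse=True)
    return [r.room_id for r in ranked]


friends = {"ana", "ben", "cy"}
interests = {"startups"}
rooms = [
    Room("quiet", {"zoe"}, {"gardening"}),
    Room("founders", {"zoe", "max"}, {"startups"}),
    Room("friends_hangout", {"ana", "ben", "cy"}, {"music"}),
]
print(rank_hallway(rooms, friends, interests))
# prints ['friends_hangout', 'founders', 'quiet']
```

A fixed formula like this cannot learn, for example, that a particular listener prefers small private rooms, which is the kind of signal the machine learning model picks up from past activity.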


“Our Gradient Boosted Decision Tree (GBDT) ranking model is trained on hundreds of features that quantify various attributes of your activity in past rooms. For instance, the model looks at whether you spend more time in small private vs. large public rooms or whether you speak often or prefer to listen, and uses these to rank rooms optimally for you. The model also looks at features of the room itself, like its duration and the number of participants, as well as features of the club if it’s a club room. The ranking model also accepts user and club embedding vectors as inputs. These embeddings are trained on user-club interaction data to build dense representations of users and clubs.”
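To make the quoted description more concrete, here is a minimal sketch of gradient boosting with depth-one trees (decision stumps) under squared loss, used to score and rank candidate rooms. It is not Clubhouse's model: the four-feature layout, the toy engagement labels, and collapsing the user and club embeddings into a single dot-product "affinity" feature are hypothetical simplifications. Real GBDT rankers typically use deeper trees and a dedicated library.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class Stump:
    """A depth-1 regression tree: one feature, one threshold, two leaf values."""
    feature: int
    threshold: float
    left: float   # output when x[feature] <= threshold
    right: float  # output when x[feature] > threshold

    def predict(self, x: Sequence[float]) -> float:
        return self.left if x[self.feature] <= self.threshold else self.right


def _mean(values: List[float]) -> float:
    return sum(values) / len(values) if values else 0.0


def fit_stump(X: List[List[float]], residuals: List[float]) -> Stump:
    """Exhaustively pick the split that minimises squared error on the residuals."""
    best, best_err = None, float("inf")
    for f in range(len(X[0])):
        for threshold in sorted({row[f] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[f] <= threshold]
            right = [r for row, r in zip(X, residuals) if row[f] > threshold]
            lm, rm = _mean(left), _mean(right)
            err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if err < best_err:
                best, best_err = Stump(f, threshold, lm, rm), err
    return best


class GBDT:
    """Gradient boosting with squared loss: each new stump fits the current residuals."""

    def __init__(self, n_trees: int = 20, learning_rate: float = 0.1):
        self.n_trees = n_trees
        self.learning_rate = learning_rate
        self.base = 0.0
        self.trees: List[Stump] = []

    def fit(self, X: List[List[float]], y: List[float]) -> "GBDT":
        self.base = _mean(y)
        preds = [self.base] * len(y)
        for _ in range(self.n_trees):
            # For squared loss, the negative gradient is simply target minus prediction.
            residuals = [t - p for t, p in zip(y, preds)]
            stump = fit_stump(X, residuals)
            self.trees.append(stump)
            preds = [p + self.learning_rate * stump.predict(x) for p, x in zip(preds, X)]
        return self

    def predict(self, x: Sequence[float]) -> float:
        return self.base + sum(self.learning_rate * t.predict(x) for t in self.trees)


def room_features(participants: int, duration_min: float, small_room_pref: float,
                  user_emb: Sequence[float], club_emb: Sequence[float]) -> List[float]:
    """Hypothetical 4-feature layout; the real model uses hundreds of features."""
    affinity = sum(u * c for u, c in zip(user_emb, club_emb))  # user-club embedding dot product
    return [float(participants), float(duration_min), small_room_pref, affinity]


def rank_rooms(model: GBDT, candidates: Dict[str, List[float]]) -> List[str]:
    """Return room ids ordered from highest to lowest model score."""
    return sorted(candidates, key=lambda rid: model.predict(candidates[rid]), reverse=True)


# Toy training data: label 1.0 means the (hypothetical) user engaged with the room.
user = [0.9, 0.1]
train_X = [
    room_features(5, 30, 0.8, user, [1.0, 0.0]),
    room_features(500, 90, 0.8, user, [0.0, 1.0]),
    room_features(8, 10, 0.8, user, [0.8, 0.2]),
    room_features(300, 60, 0.8, user, [0.1, 0.9]),
]
train_y = [1.0, 0.0, 1.0, 0.0]

model = GBDT().fit(train_X, train_y)
candidates = {
    "big_public": room_features(400, 80, 0.8, user, [0.0, 1.0]),
    "small_club": room_features(6, 20, 0.8, user, [0.9, 0.1]),
}
print(rank_rooms(model, candidates))
# prints ['small_club', 'big_public']
```

Each stump only corrects what the previous ones got wrong, and the learning rate shrinks each correction; that additive structure is what "gradient boosted" refers to.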

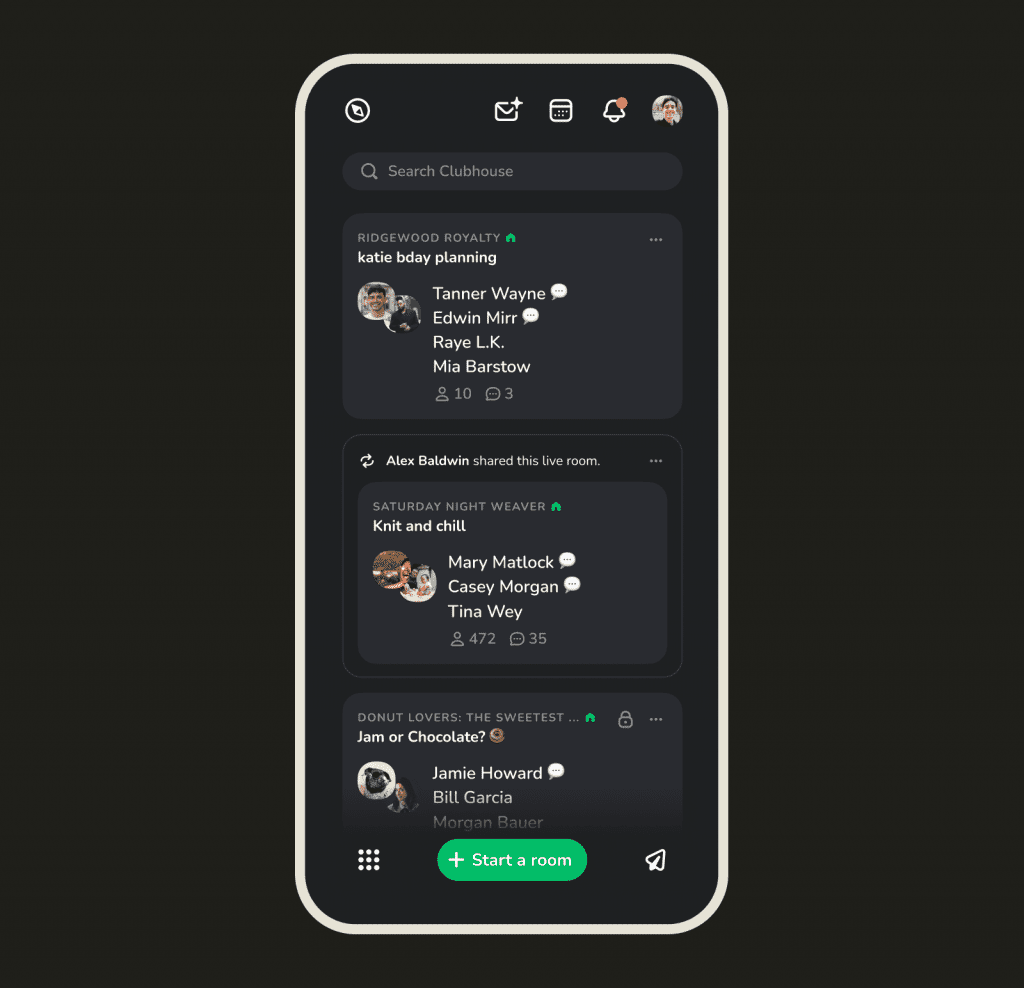

Capturing changes to Clubhouse rooms in real time

Many of the features used by the ranking model are slow-moving batch features, which is to say that they are computed relatively infrequently, perhaps once every few hours or once a day. But live rooms on Clubhouse are ever-evolving: a room might be winding down as people say their goodbyes and head out, or it might be blowing up because Oprah just dropped in. Slow-moving features don’t capture these changes, so we also feed fast-moving, streaming features into the model to keep it abreast of the latest changes to rooms when ranking them. We’ve built a simple but powerful framework that computes streaming features from individual events fired every time a room changes. The framework lets us aggregate these events into useful features such as the rate of change in room size, the total number of rooms you’ve seen in the last 10 minutes, or the number of links pinned in a room. These fast features, as we call them, are logged at inference time so that their historical values can be used to train future iterations of the model.
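The sketch below shows one way such a framework could turn individual room events into the three example features mentioned above and log them at inference time. The event kinds, the `FastFeatures` class, the 600-second sliding window, and the in-process `TRAINING_LOG` list are illustrative assumptions, not Clubhouse's implementation.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Tuple


@dataclass
class RoomEvent:
    room_id: str
    kind: str   # hypothetical event kinds: "join", "leave", "pin_link"
    ts: float   # event time in seconds


class FastFeatures:
    """Aggregates per-room events into streaming ("fast") features over a sliding window."""

    def __init__(self, window_s: float = 600.0):  # 600 s matches the 10-minute example
        self.window_s = window_s
        self.size: Dict[str, int] = defaultdict(int)
        self.size_history: Dict[str, Deque[Tuple[float, int]]] = defaultdict(deque)
        self.pinned_links: Dict[str, int] = defaultdict(int)
        self.seen: Dict[str, Deque[Tuple[float, str]]] = defaultdict(deque)

    def _evict(self, q: deque, now: float) -> None:
        # Entries are (timestamp, value) pairs appended in time order; drop those outside the window.
        while q and q[0][0] < now - self.window_s:
            q.popleft()

    def on_room_event(self, e: RoomEvent) -> None:
        if e.kind == "join":
            self.size[e.room_id] += 1
        elif e.kind == "leave":
            self.size[e.room_id] = max(0, self.size[e.room_id] - 1)
        elif e.kind == "pin_link":
            self.pinned_links[e.room_id] += 1
        hist = self.size_history[e.room_id]
        hist.append((e.ts, self.size[e.room_id]))
        self._evict(hist, e.ts)

    def on_room_seen(self, user_id: str, room_id: str, ts: float) -> None:
        self.seen[user_id].append((ts, room_id))

    def size_rate_per_min(self, room_id: str, now: float) -> float:
        """Change in participant count per minute across the events still in the window."""
        hist = self.size_history[room_id]
        self._evict(hist, now)
        if len(hist) < 2 or hist[-1][0] == hist[0][0]:
            return 0.0
        (t0, s0), (t1, s1) = hist[0], hist[-1]
        return (s1 - s0) / ((t1 - t0) / 60.0)

    def rooms_seen_recently(self, user_id: str, now: float) -> int:
        """Number of distinct rooms the user was shown within the window."""
        q = self.seen[user_id]
        self._evict(q, now)
        return len({room for _, room in q})


TRAINING_LOG: List[dict] = []  # hypothetical sink; in practice a logging pipeline or table


def features_for_inference(ff: FastFeatures, user_id: str, room_id: str, now: float) -> dict:
    """Snapshot fast features for one (user, room) pair and log them for future training."""
    snapshot = {
        "size_rate_per_min": ff.size_rate_per_min(room_id, now),
        "rooms_seen_10m": ff.rooms_seen_recently(user_id, now),
        "pinned_links": ff.pinned_links[room_id],
    }
    TRAINING_LOG.append({"user_id": user_id, "room_id": room_id, "ts": now, **snapshot})
    return snapshot


ff = FastFeatures()
for t in (0, 30, 60):
    ff.on_room_event(RoomEvent("r1", "join", t))
ff.on_room_event(RoomEvent("r1", "pin_link", 60))
ff.on_room_seen("u1", "r1", 40)
ff.on_room_seen("u1", "r2", 50)
print(features_for_inference(ff, "u1", "r1", now=60))
# prints {'size_rate_per_min': 2.0, 'rooms_seen_10m': 2, 'pinned_links': 1}
```

Logging the exact values the model saw at scoring time avoids having to recompute fast features after the fact, when the room state they described no longer exists.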

High-speed model inference

Every time you pull to refresh the hallway, our servers fetch hundreds of features for each of hundreds of rooms, run model inference on them, and send the results back to the iOS and Android apps. This can be a prohibitively resource-intensive and slow process, so we’ve taken some steps to make sure users get the snappiest experience possible. We use a simple memory-backed feature store so that fetching model features stays fast. We’ve also spun up a lightweight, stateless microservice responsible solely for model inference: our server fetches the features, ships them to this service, and receives the model scores used for ranking. This isolates resource-intensive model inference from the core server and lets us scale it independently.
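A minimal sketch of this split between the core server and the inference service follows. The in-memory store, the JSON payload shape, and the linear scorer standing in for the GBDT are all hypothetical; the `service_call` parameter represents the network hop to the inference microservice and is a plain function here so the example runs locally.

```python
import json
from typing import Callable, Dict, List, Sequence


class InMemoryFeatureStore:
    """Hypothetical memory-backed store: features live in a dict, so lookups avoid disk or network I/O."""

    def __init__(self, n_features: int):
        self.n_features = n_features
        self._rows: Dict[str, List[float]] = {}

    def put(self, key: str, features: Sequence[float]) -> None:
        if len(features) != self.n_features:
            raise ValueError("unexpected feature length")
        self._rows[key] = list(features)

    def get_many(self, keys: Sequence[str]) -> List[List[float]]:
        # Missing keys fall back to zeros rather than failing the whole refresh.
        zeros = [0.0] * self.n_features
        return [list(self._rows.get(k, zeros)) for k in keys]


class InferenceService:
    """Stateless scorer: weights are fixed at startup and each request is handled independently."""

    def __init__(self, weights: Sequence[float]):
        self._weights = tuple(weights)

    def handle(self, request_body: str) -> str:
        payload = json.loads(request_body)
        # Linear scoring is a stand-in for the real model.
        scores = [sum(w * x for w, x in zip(self._weights, row)) for row in payload["features"]]
        return json.dumps({"scores": scores})


def refresh_hallway(user_id: str, room_ids: List[str],
                    user_store: InMemoryFeatureStore, room_store: InMemoryFeatureStore,
                    service_call: Callable[[str], str]) -> List[str]:
    """Core-server side: fetch features, ship them for scoring, and sort rooms by score."""
    user_feats = user_store.get_many([user_id])[0]
    batch = [user_feats + room_feats for room_feats in room_store.get_many(room_ids)]
    response = json.loads(service_call(json.dumps({"features": batch})))
    scored = sorted(zip(room_ids, response["scores"]), key=lambda p: p[1], reverse=True)
    return [room_id for room_id, _ in scored]


user_store = InMemoryFeatureStore(n_features=1)
room_store = InMemoryFeatureStore(n_features=2)
user_store.put("u1", [0.5])
room_store.put("r1", [1.0, 0.0])
room_store.put("r2", [0.0, 3.0])
service = InferenceService(weights=[1.0, 2.0, -1.0])
print(refresh_hallway("u1", ["r1", "r2", "r3"], user_store, room_store, service.handle))
# prints ['r1', 'r3', 'r2']
```

Because `InferenceService` keeps no per-request state, identical replicas can be added or removed freely, which is what makes it straightforward to scale inference separately from the core server.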

Looking ahead

We’re always experimenting with ways to make ranking even better. Incorporating user and channel embeddings and audio transcripts, training larger models, and improving our machine learning infrastructure are some of the exciting next steps we’re thinking about. Thanks for reading, and we hope to see you around the hallway!

(Source: Clubhouse Blog)