OpenAI introduced much of the world to Artificial Intelligence (AI) through ChatGPT, but that appears to have only scratched the surface. The tech company has kept scaling its AI operations ever since, releasing upgrades and new applications along the way. Its latest offering is Sora, a text-to-video tool.

Sora, whose name means ‘sky’ in Japanese, is a new artificial intelligence tool that can create realistic videos of up to 60 seconds from text prompts in which the user describes the style and subject of the clip. The videos carry a watermark indicating that the clip was made by AI.

Introducing Sora, our text-to-video model.

Sora can create videos of up to 60 seconds featuring highly detailed scenes, complex camera motion, and multiple characters with vibrant emotions. https://t.co/7j2JN27M3W

Prompt: “Beautiful, snowy… pic.twitter.com/ruTEWn87vf

— OpenAI (@OpenAI) February 15, 2024

What Is Unique About Sora?

Helmed by Sam Altman, the AI company shared a blog post explaining Sora’s ability to generate realistic videos using still images or existing footage provided by the user for reference. “We’re teaching AI to understand and simulate the physical world in motion, with the goal of training models that help people solve problems that require real-world interaction,” said OpenAI in the blog.

“Sora is able to generate complex scenes with multiple characters, specific types of motion, and accurate details of the subject and background. The model understands not only what the user has asked for in the prompt, but also how those things exist in the physical world.”

Prompt: “Animated scene features a close-up of a short fluffy monster kneeling beside a melting red candle. the art style is 3d and realistic, with a focus on lighting and texture. the mood of the painting is one of wonder and curiosity, as the monster gazes at the flame with… pic.twitter.com/aLMgJPI0y6

— OpenAI (@OpenAI) February 15, 2024

One thing that may set Sora apart is its ability to interpret long prompts, including one example that clocked in at 135 words. The sample videos shared by OpenAI demonstrated how Sora can create a variety of characters and scenes, from people, animals, and fluffy monsters to cityscapes, landscapes, zen gardens, and even a submerged New York City.

This is thanks in part to OpenAI’s past work with its Dall-E and GPT models. Sora borrows Dall-E 3’s recaptioning technique, which generates “highly descriptive captions for the visual training data.” The sample videos shared by the tech company do appear remarkably realistic.

Where It Falls Short

OpenAI acknowledged that Sora has weaknesses too: it can struggle to accurately depict the physics of a complex scene and to understand cause and effect. “For example, a person might take a bite out of a cookie, but afterward, the cookie may not have a bite mark,” the post said. The text-to-video tool can also mix up left and right.

The company wishes to take “several important safety steps” before releasing the tool to the general public. That includes meeting OpenAI’s existing safety standards, which prohibit extreme violence, sexual content, hateful imagery, celebrity likeness, and the IP of others.

The Emerging Sector

Text-to-video has become the latest arms race in generative AI. Tech giants such as OpenAI, Google, and Microsoft are looking beyond text and image generation, seeking to cement their position in a sector projected to reach $1.3 trillion in revenue by 2032. It is also an attempt to win over consumers who have been intrigued by generative AI since ChatGPT arrived over a year ago.

Internet Reacts

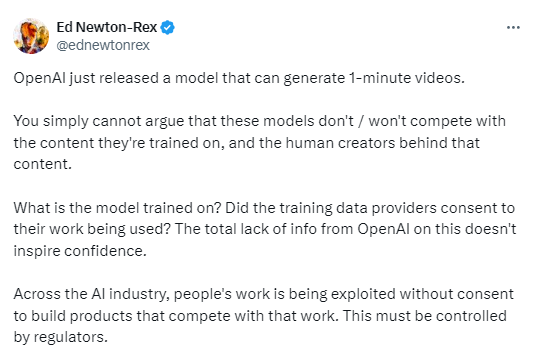

Reactions to Sora on X can be divided into two camps: “Is Sora going to make video production obsolete?” and “How can I try it?” Settling the first question will involve a long, tough fight over artistic rights, one that requires regulation at the highest level. As for the second question, you can’t try it yet. While Sora was publicly announced yesterday, OpenAI says it’s still in the red-teaming phase.

The tech company is granting access to a select group of “visual artists, designers, and filmmakers to gain feedback on how to advance the model to be most helpful for creative professionals.” The aim is to ensure that creative professionals can benefit from the technology rather than be replaced by it. The tool is also being adversarially tested to make sure it doesn’t produce harmful or inappropriate content.

https://t.co/qbj02M4ng8 pic.twitter.com/EvngqF2ZIX

— Sam Altman (@sama) February 15, 2024

Those in awe of the technology, meanwhile, cannot stop raving about it. After Sam Altman invited people to reply with captions for videos they would like to see generated, everyone got busy thinking up the most bizarre prompts. One user commented, “Thank you, OpenAI has literally democratized cinema. Now, everyone can be an artist and create their own movies going forward. This will drastically reduce the exorbitant expenses of Hollywood, especially for low-quality content with high costs.”

The AI model is also getting a lot of flak, since it could put many careers at risk. “man this is the worst time to be wanting to go into an art related career isn’t it,” wrote an X user. “No please stop my dream was to make cartoons and I don’t want to lose that,” said another. A third user wrote, “As someone who used to do animation there is so many things wrong with this scene.”

Will Sora really be a threat to animators, or will it end up being a fad that dies out? We won’t really know until Sora becomes publicly available and businesses start putting it to use. How do you feel about OpenAI’s Sora? Let us know in the comments!